InsightXR aims to let multiple users visualize 3D designs and collaborate on them asynchronously using augmented and virtual reality technology. The proposed research focuses on users’ subjective feedback and preferences: qualitative feedback is merged with quantitative data to steer the optimization process. In each generation, users’ qualitative feedback and physiological responses inform the new designs produced by our proposed interactive genetic algorithm (IGA). InsightXR is the recipient of the CTO’s Berkeley Changemaker Grant. You can read more about the project here.
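
The idea of merging qualitative and quantitative feedback inside an IGA can be illustrated with a minimal sketch. Everything in it is an assumption for illustration, not InsightXR's actual method: the genome is a hypothetical list of normalized design parameters, fitness is a hypothetical weighted sum of a user rating (`rate`), a quantitative score (`measure`) and a physiological signal (`physio`), each assumed to lie in [0, 1], and the weights are arbitrary.

```python
import random
from typing import Callable, List, Tuple

Genome = List[float]  # hypothetical encoding: normalized design parameters in [0, 1]
Scorer = Callable[[Genome], float]  # each scorer is assumed to return a value in [0, 1]


def combined_fitness(g: Genome, rate: Scorer, measure: Scorer, physio: Scorer,
                     w_q: float = 0.5, w_m: float = 0.3, w_p: float = 0.2) -> float:
    # Hypothetical weighted merge of qualitative rating, quantitative metric
    # and physiological response; the weights are illustrative only.
    return w_q * rate(g) + w_m * measure(g) + w_p * physio(g)


def tournament(pop: List[Genome], scores: List[float], rng: random.Random, k: int = 2) -> Genome:
    # Pick k distinct candidates at random and return the fittest one.
    picks = rng.sample(range(len(pop)), k)
    return pop[max(picks, key=lambda i: scores[i])]


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    # One-point crossover; assumes genomes have at least two genes.
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def mutate(g: Genome, rng: random.Random, p: float = 0.1, sigma: float = 0.1) -> Genome:
    # Gaussian mutation on each gene with probability p, clamped to [0, 1].
    return [min(1.0, max(0.0, x + rng.gauss(0.0, sigma))) if rng.random() < p else x
            for x in g]


def run_iga(rate: Scorer, measure: Scorer, physio: Scorer, n_genes: int = 4,
            pop_size: int = 8, generations: int = 5, seed: int = 0) -> Tuple[Genome, float]:
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(n_genes)] for _ in range(pop_size)]
    for _ in range(generations):
        # In a real IGA, `rate` would prompt the user in XR for every design.
        scores = [combined_fitness(g, rate, measure, physio) for g in pop]
        children = [pop[scores.index(max(scores))]]  # elitism: keep the best design
        while len(children) < pop_size:
            p1 = tournament(pop, scores, rng)
            p2 = tournament(pop, scores, rng)
            children.append(mutate(crossover(p1, p2, rng), rng))
        pop = children
    scores = [combined_fitness(g, rate, measure, physio) for g in pop]
    best = max(range(len(pop)), key=lambda i: scores[i])
    return pop[best], scores[best]


if __name__ == "__main__":
    # Stand-in scorers simulating a user rating, a metric and a sensor reading.
    design, score = run_iga(lambda g: g[0],
                            lambda g: 1 - abs(g[1] - 0.5) * 2,
                            lambda g: 1 - g[2])
    print(design, score)
```

Elitism keeps the best design from one generation to the next, so the best combined score never decreases. Because every user rating costs attention, a practical IGA would likely use much smaller populations or fewer rated designs per generation to limit user fatigue.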

We introduce RadVR, a 6DOF virtual reality daylight analysis tool for daylighting-based design and simulation that lets users comprehend qualitative immersive renderings while analyzing them alongside quantitative, physically correct daylighting calculations.

The BAMPFA Augmented Reality Project makes visible the challenges of transforming the University’s old printing facilities into a world-class museum designed by architects Diller Scofidio + Renfro. The project uses multimodal AR, superimposing 3D models, animations, photography and video onto physical objects. The story of the new BAMPFA is uncovered through the lens of an iPad pointed at a physical model of the museum or at the building itself.

XR Maps of Berkeley investigates the potential of VR to make visible and accessible the wealth of research and creativity happening across the Berkeley campus. The project consisted of creating six immersive environments whose user experiences uniquely convey ongoing research or creative work at Berkeley, combining scientific and technical information, experiential environments and game play. Student groups created the environments as part of a graduate seminar. The results are intended to serve as demonstrators for the future expansion of VR/AR work horizontally across campus.

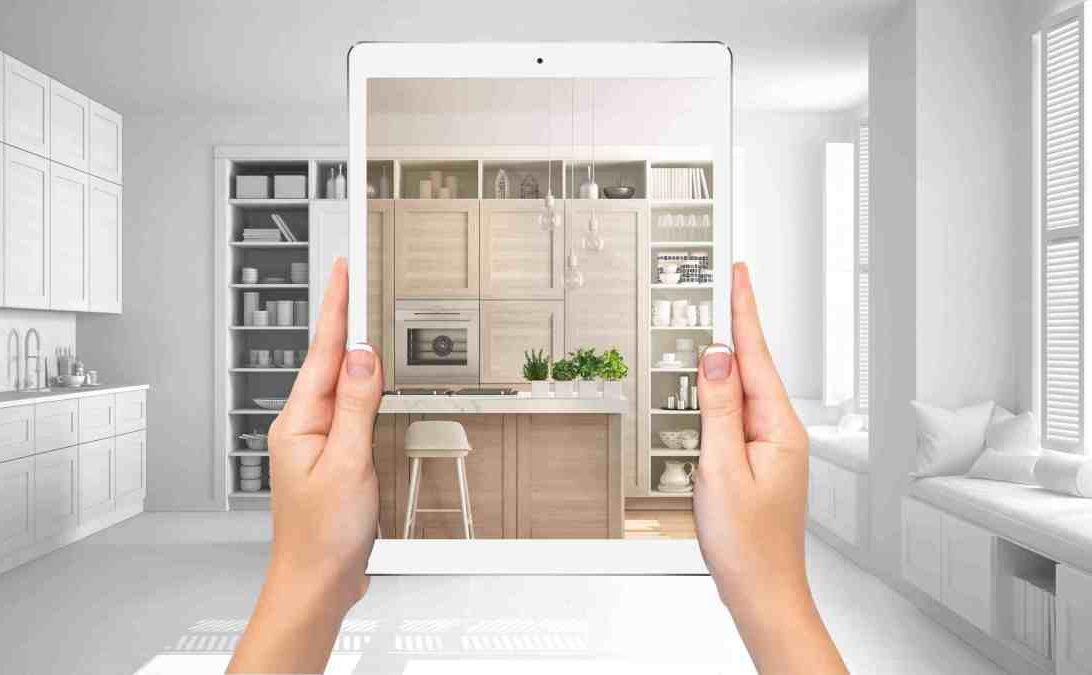

Generating dynamic spatial environments can improve productivity and efficiency in future AR/VR workplaces by providing custom working spaces for various tasks and work settings, all adapted to the real-world environment surrounding the user. By taking advantage of generative design and 3D recognition methods, we envision that our proposed system could play a major role in future augmented and virtual reality spatial interaction workflows and facilitate automated space layouts.

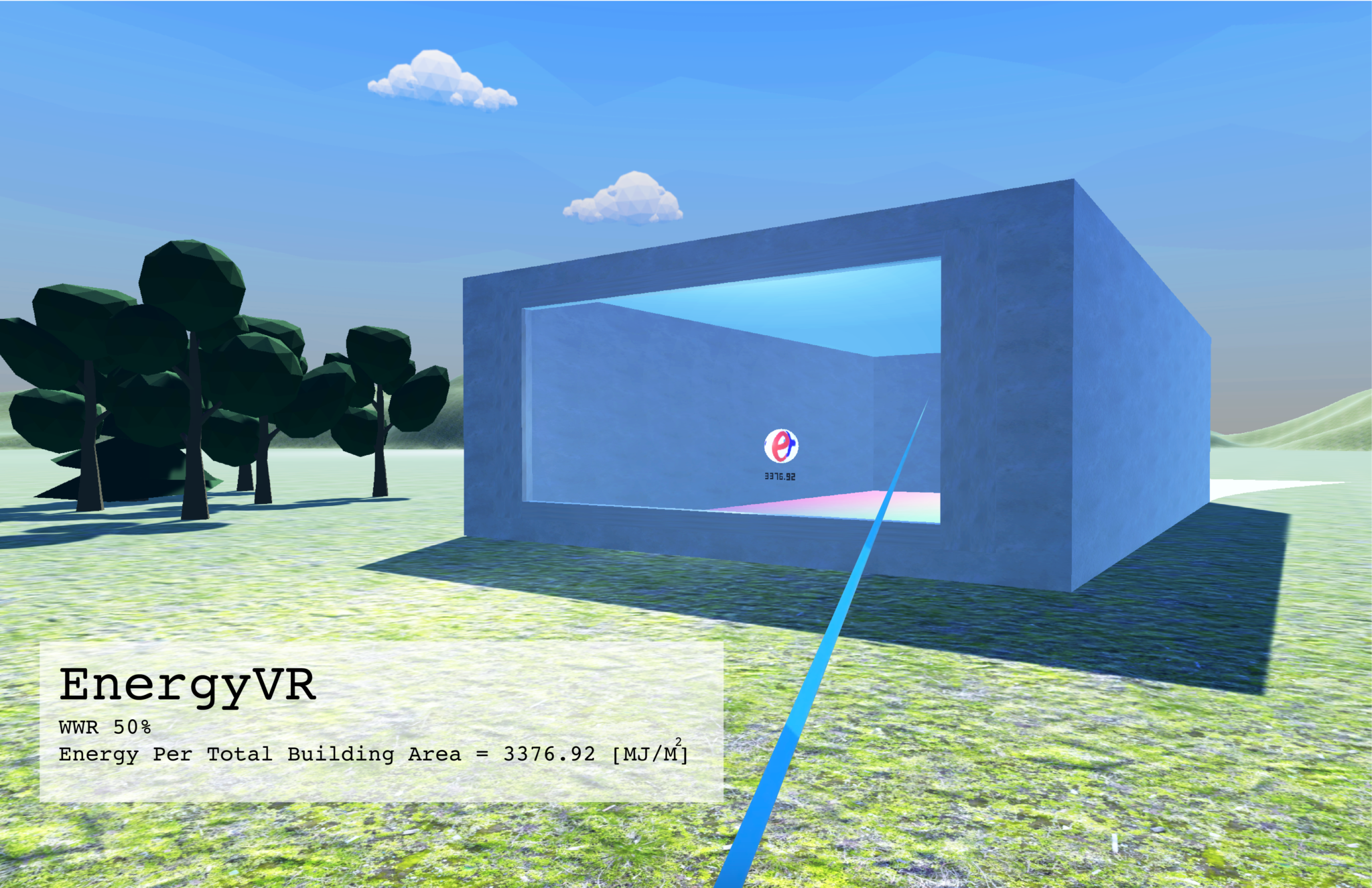

EnergyVR is a virtual reality energy analysis tool that uses EnergyPlus to help designers make informed decisions about low-energy buildings. Users can analyze their design in real time as they make spatial changes or modify envelope properties, all within an immersive experience.

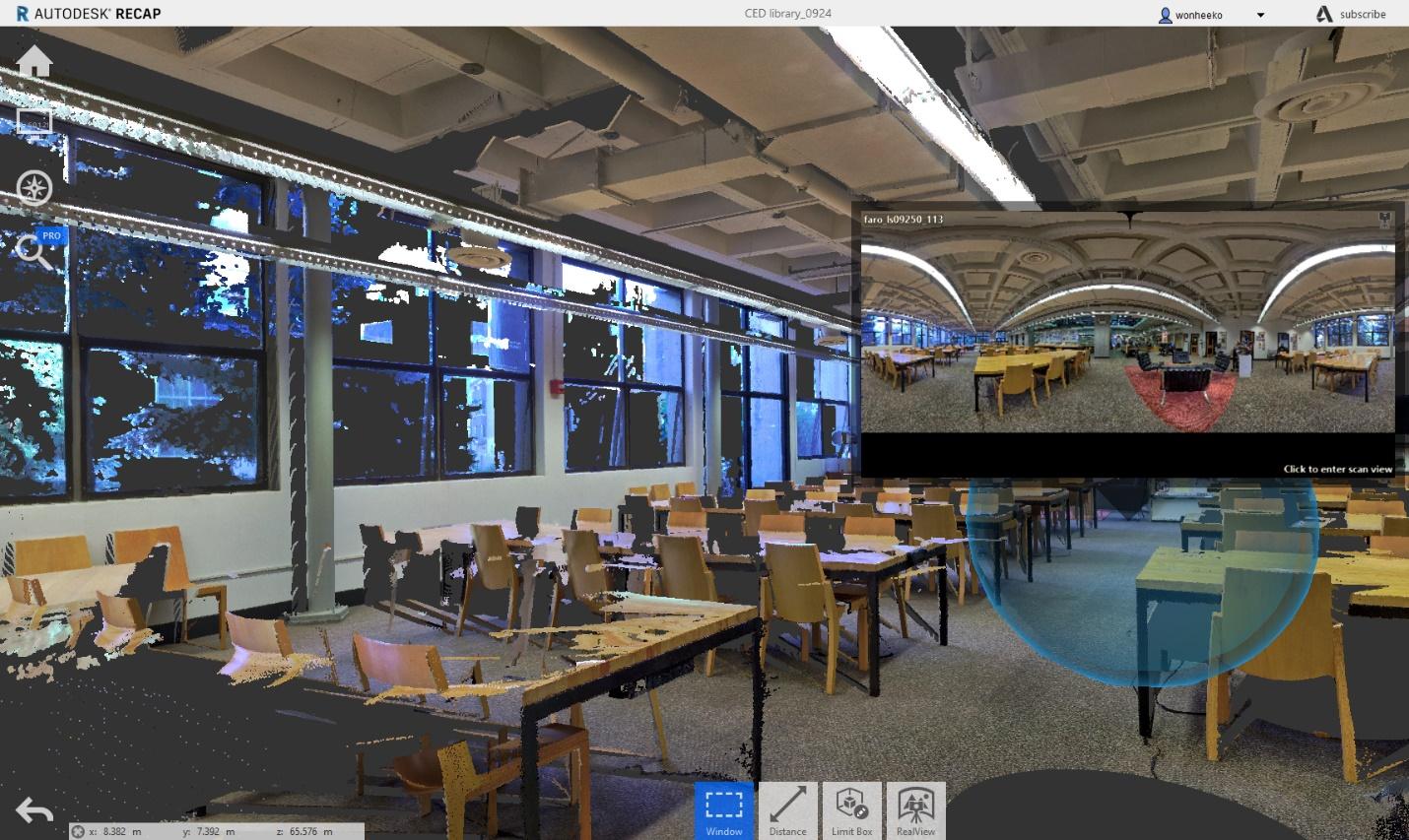

Using VR with 3D scans and 360° fisheye High Dynamic Range (HDR) photographs is a relatively new research approach for the built environment. To study the impact of outside views on occupants, we reproduce the 3D scans and HDR images as immersive VR scenes in which research participants experience various view conditions through a 6DOF VR headset.

Co.DesignX in VR-AR explores a novel methodology for using immersive environments and experiences within the current design workflow for healthcare facilities, enabling collaborative design with stakeholders. The research uses an evidence-based design approach to evaluate the impact of design on health in healthcare spaces. It is proposed as a participatory research design in which patients, staff and professionals act as co-designers, collaborating to create and deliver an experience.